AI Accelerators Without the Hype: Why Memory Traffic (Not TOPS) Decides Real Performance

Let’s talk about something almost every AI hardware company shows off: TOPS (Tera Operations Per Second).

It's the number that gets circled on slides, spotlighted in press releases, yelled from expo booths.

"50 TOPS!"

"500 TOPS!"

"1000 TOPS!"

But if you’ve ever tried deploying a real model on one of these accelerators, you already know the frustrating secret:

TOPS don’t tell you how the chip will perform in the real world.

Because real models don’t run in isolation. They run inside full systems. And the part that makes or breaks AI performance isn't compute; it's data movement.

The real bottleneck in AI hardware is memory traffic, not MAC count.

Let’s unpack that. Simply. Honestly. From the perspective of someone who actually builds and deploys edge AI systems, not someone who just markets them.

Why TOPS Look Good but Don’t Matter

TOPS is a peak theoretical number.

It assumes every compute unit is busy on every cycle, with weights and activations already loaded and no overhead for fetching, moving, or reusing data. That’s not how the real world works.

In reality:

- Your compute cores spend most of their time waiting for data.

- The memory bus gets crowded.

- Off-chip DRAM fetches cause latency spikes.

- Power shoots up because you’re constantly transferring data back and forth.

So even a fancy “100 TOPS accelerator” might deliver only a fraction of that under real workloads, especially at the edge.
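A common way to reason about that gap is the roofline model: delivered throughput is capped by whichever is lower, peak compute or memory bandwidth multiplied by arithmetic intensity (operations performed per byte fetched). The minimal sketch below assumes a hypothetical accelerator with a 100 TOPS peak and 50 GB/s of memory bandwidth. These are illustrative numbers, not measurements of any real chip.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline bound: min(peak compute, bandwidth x arithmetic intensity), in TOPS."""
    # GB/s * ops/byte = Gops/s; divide by 1000 to convert Gops/s to TOPS.
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_bound_tops)


if __name__ == "__main__":
    # Hypothetical accelerator: 100 TOPS peak, 50 GB/s memory bandwidth.
    peak = 100.0
    bw = 50.0
    for intensity in (20.0, 200.0, 2000.0, 20000.0):
        tops = attainable_tops(peak, bw, intensity)
        print(f"{intensity:>8.0f} ops/byte -> {tops:6.1f} TOPS ({tops / peak:.1%} of peak)")
```

With these assumed numbers, a workload doing 20 operations per byte fetched reaches only 1 TOPS (1% of peak). The advertised 100 TOPS is only reachable above 2,000 operations per byte.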

When you’re building products like wearables, smart sensors, hearing devices, or medical patches, those gaps can ruin everything: power budget, thermals, responsiveness, UX.

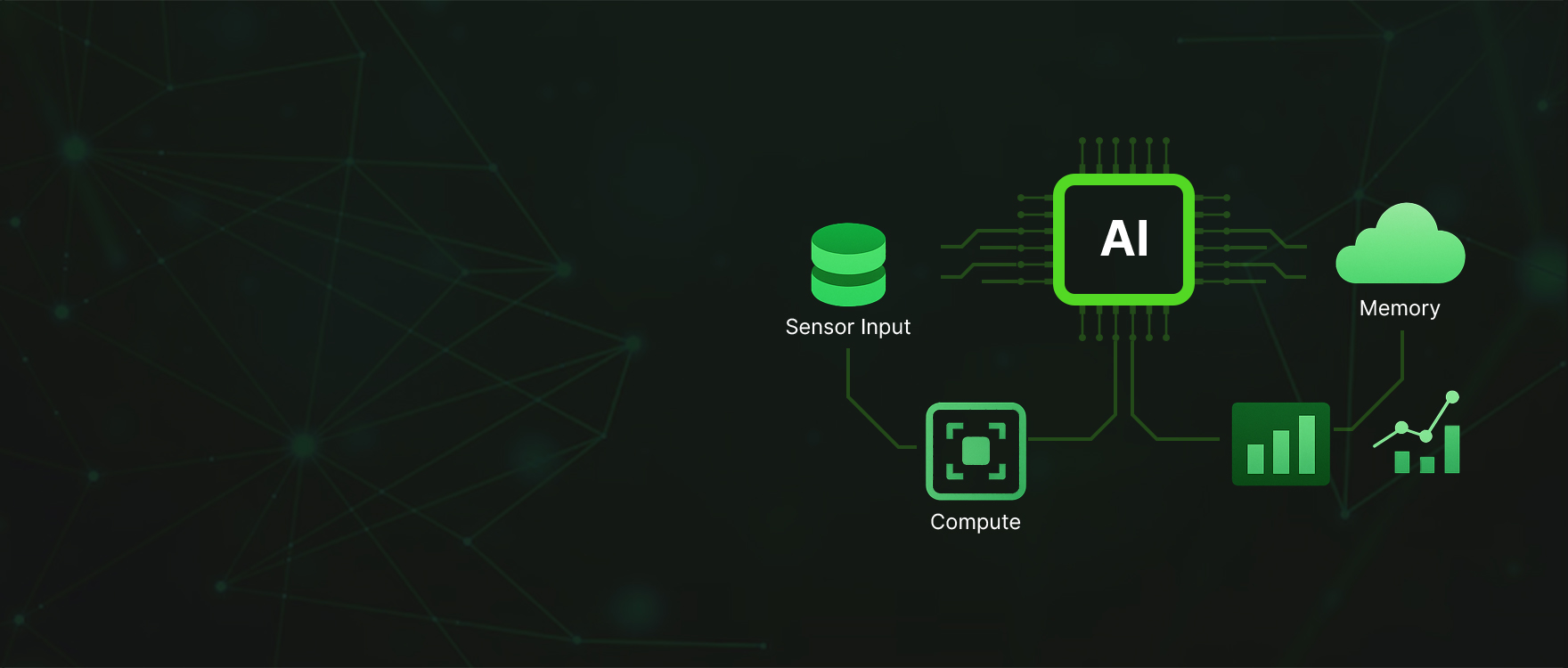

The Real Problem: Data Has to Move Before It Gets Processed

Every AI workload, no matter how simple or big, follows the same loop:

- Grab data from a sensor.

- Move it into memory.

- Load it into compute units.

- Store the output.

- Move it again for the next layer.

Every one of those steps costs time and energy. When you’re doing that for every activation map, every weight tile, every batch of input, it adds up. Fast.
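To make that loop concrete, here is a rough tally of bytes moved per inference. It is a minimal sketch that assumes a hypothetical three-layer fully connected model, int8 weights and activations, batch size 1, and a naive schedule in which each weight and activation crosses the memory interface once per inference. Real compilers tile and reuse data differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DenseLayer:
    """A fully connected layer mapping in_features -> out_features."""
    in_features: int
    out_features: int


def bytes_moved_per_inference(layers: list[DenseLayer], bytes_per_value: int = 1) -> dict[str, int]:
    """Naive schedule: each layer reads its weights and input activations once
    and writes its output activations once."""
    weights = activations_in = activations_out = 0
    for layer in layers:
        weights += layer.in_features * layer.out_features * bytes_per_value
        activations_in += layer.in_features * bytes_per_value
        activations_out += layer.out_features * bytes_per_value
    macs = sum(layer.in_features * layer.out_features for layer in layers)
    return {
        "weight_bytes": weights,
        "activation_read_bytes": activations_in,
        "activation_write_bytes": activations_out,
        "total_bytes": weights + activations_in + activations_out,
        "macs": macs,
    }


if __name__ == "__main__":
    # Hypothetical small MLP; sizes chosen for illustration only.
    model = [DenseLayer(490, 128), DenseLayer(128, 128), DenseLayer(128, 12)]
    stats = bytes_moved_per_inference(model)
    for key, value in stats.items():
        print(f"{key:>24}: {value:,}")
    print(f"MACs per byte moved: {stats['macs'] / stats['total_bytes']:.2f}")
```

In this example, weights account for 80,640 of the 81,654 bytes, and the model performs about one MAC per byte moved. For batch-1 fully connected layers that ratio is structural: each weight is used exactly once, so where the weights live largely decides the cost.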

When you add DRAM into the loop? It gets even worse. External memory access can be 50 to 100x slower and more power-hungry than on-chip SRAM. That’s why designs that rely heavily on external memory struggle to perform well in real-world AI, especially real-time AI.
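Here is a back-of-the-envelope sketch of what that gap means for power. The per-byte energies are assumed placeholders, chosen only so that a DRAM access costs 100x an SRAM access (the upper end of the range above). Real values depend on process node, memory technology, and access pattern. The traffic and inference rate are hypothetical too.

```python
# Assumed, illustrative per-byte access energies in picojoules. Not measured values.
SRAM_PJ_PER_BYTE = 1.0
DRAM_PJ_PER_BYTE = 100.0


def movement_energy_uj(total_bytes: float, dram_fraction: float) -> float:
    """Energy in microjoules to move total_bytes when dram_fraction of them
    come from external DRAM and the rest from on-chip SRAM."""
    if not 0.0 <= dram_fraction <= 1.0:
        raise ValueError("dram_fraction must be between 0 and 1")
    dram_bytes = total_bytes * dram_fraction
    sram_bytes = total_bytes - dram_bytes
    picojoules = dram_bytes * DRAM_PJ_PER_BYTE + sram_bytes * SRAM_PJ_PER_BYTE
    return picojoules / 1e6  # 1 uJ = 1e6 pJ


if __name__ == "__main__":
    bytes_per_inference = 80_000  # hypothetical traffic per inference
    inferences_per_second = 50  # hypothetical continuous-inference rate
    for frac in (0.0, 0.1, 1.0):
        energy = movement_energy_uj(bytes_per_inference, frac)
        power_uw = energy * inferences_per_second  # uJ/inference * inferences/s = uW
        print(f"DRAM share {frac:>4.0%}: {energy:6.3f} uJ/inference, {power_uw:6.1f} uW for data movement")
```

Under these assumptions, 80 KB per inference at 50 inferences per second costs roughly 4 µW for data movement if everything stays in SRAM, about 44 µW if just 10% comes from DRAM, and about 400 µW if all of it does. That is before a single MAC is counted.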

So, the real question isn’t:

“How fast can your chip compute?”

It’s:

“How many times does your chip need to move data around just to compute anything at all?”

Edge Workloads Make the Memory Problem Even Bigger

If you’re building something that lives on the edge, where you don’t have server-grade cooling, wall power, or a streaming internet connection, memory becomes the first thing that breaks.

Typical edge use cases

- Keyword spotting on low-power earbuds

- Heart rate classification on a wearable

- Glass break detection in smart home sensors

- Early fault detection in industrial motors

All of them involve small models. All of them should run fast on paper.

But they often don’t because the memory subsystem can’t keep up.

What’s the point of having a 50 TOPS chip if it’s starving 90% of the time waiting for data?

This is why so many AI accelerators disappoint once they leave the lab.
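The “starving 90% of the time” scenario falls out of a simple latency model. The minimal sketch below uses hypothetical numbers: a 50 TOPS peak, 1 GOP and 9 MB of memory traffic per inference, and 50 GB/s of bandwidth. It compares a chip that cannot overlap transfers with compute against one that overlaps them perfectly. Real hardware sits somewhere in between, depending on prefetching and buffering.

```python
def utilization(ops: float, peak_ops_per_s: float, bytes_moved: float,
                bandwidth_bytes_per_s: float, overlap: bool = False) -> float:
    """Fraction of wall-clock time the compute units are busy during one inference."""
    compute_s = ops / peak_ops_per_s
    memory_s = bytes_moved / bandwidth_bytes_per_s
    # Without overlap, compute sits idle while data is transferred, so times add.
    # With perfect overlap, the slower of the two sets the inference time.
    total_s = max(compute_s, memory_s) if overlap else compute_s + memory_s
    return compute_s / total_s


if __name__ == "__main__":
    peak = 50e12  # hypothetical 50 TOPS peak
    ops = 1e9  # hypothetical 1 GOP per inference
    bytes_moved = 9e6  # hypothetical 9 MB of weights/activations fetched per inference
    bandwidth = 50e9  # hypothetical 50 GB/s memory bandwidth
    for overlap in (False, True):
        u = utilization(ops, peak, bytes_moved, bandwidth, overlap)
        print(f"overlap={overlap}: busy {u:.1%}, effective {peak * u / 1e12:.1f} TOPS")
```

With these numbers the compute units are busy about 10% of the time without overlap, so the “50 TOPS” chip behaves like a 5 TOPS one. Perfect overlap only lifts utilization to about 11%, because the 180 µs transfer time becomes the floor. The lever that actually helps is moving fewer bytes.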

Fix the Bottleneck. Don’t Ignore It.

Let’s be clear: we didn’t build chips and then think about memory.

We built chips “around” memory.

Our DigAn® architecture processes signals, features, and inference in-place, inside the memory array itself. No constant shuttling of data. No DRAM dependency. No unnecessary movement of activations or weights.

The result?

- No compute starvation

- No thermal blowups

- No 80% idle MAC pipelines

- Real performance, every cycle, under 100 µW in many use cases

Instead of chasing higher TOPS, we chased lower data movement.

Instead of treating memory as a cost, we turned it into a compute substrate.

That’s why the GPX10 processor and its siblings don’t act like a cut-down data center chip. They act like real-time, localized intelligence engines made for edge computing.

If that idea interests you, you’ll love this deep dive: https://www.ambientscientific.ai/

Choosing the Right AI Accelerator: What Really Matters

Forget TOPS. Ask these instead:

- Where do activations and weights live during execution?

- Does the model stay in on-chip memory?

- How often does the compute pipeline stall waiting on DRAM?

- Can the device run AI without a CPU/MCU handling pre-processing?

- What’s the power draw during continuous inference, not peak spec?

If the answers aren’t specific, measurable, and proven, the chip won’t perform in production.
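The “does the model stay in on-chip memory?” question can be estimated before any hardware arrives. Here is a minimal sketch. It assumes a hypothetical chain of dense layers stored in int8, ignores biases, and assumes a schedule where only one layer's input and output activation buffers must be live at once. A real toolchain may need extra scratch space.

```python
from __future__ import annotations


def fits_on_chip(layer_dims: list[int], sram_bytes: int, bytes_per_value: int = 1) -> tuple[bool, int]:
    """Rough on-chip fit check for a chain of dense layers.

    layer_dims = [input_dim, hidden_1, ..., output_dim]. Required memory is all
    weights (biases ignored) plus the largest input+output activation pair.
    Returns (fits, required_bytes).
    """
    pairs = list(zip(layer_dims, layer_dims[1:]))
    if not pairs:
        raise ValueError("need at least an input and an output dimension")
    weight_bytes = sum(a * b for a, b in pairs) * bytes_per_value
    peak_activation_bytes = max(a + b for a, b in pairs) * bytes_per_value
    required = weight_bytes + peak_activation_bytes
    return required <= sram_bytes, required


if __name__ == "__main__":
    dims = [490, 128, 128, 12]  # hypothetical small model, int8 storage
    for sram_kib in (64, 128):
        fits, need = fits_on_chip(dims, sram_kib * 1024)
        verdict = "fits" if fits else "spills to external memory"
        print(f"{sram_kib} KiB SRAM: needs {need:,} bytes -> {verdict}")
```

Here the model needs 81,258 bytes, so it spills out of a hypothetical 64 KiB SRAM but fits in 128 KiB. Once it spills, every inference pays for external-memory traffic.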

Why This Matters If You Build AI Products

If your product has to:

- Run off a battery

- Stay cool

- Make fast decisions

- Adapt to real signals

- Last months or years in the field

Then you don’t need more TOPS. You need architectural honesty.
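For the battery and lifetime items, a first-order estimate is usable battery energy divided by average power draw. This sketch assumes a nominal coin cell of about 225 mAh at 3 V and treats the whole capacity as usable. Real lifetime also depends on self-discharge, temperature, peak-current limits, and every other component on the board.

```python
HOURS_PER_DAY = 24.0


def battery_life_days(capacity_mah: float, voltage_v: float, avg_power_uw: float,
                      usable_fraction: float = 1.0) -> float:
    """First-order runtime estimate: usable energy (mWh) / average power (mW), in days."""
    if avg_power_uw <= 0:
        raise ValueError("avg_power_uw must be positive")
    energy_mwh = capacity_mah * voltage_v * usable_fraction
    power_mw = avg_power_uw / 1000.0  # convert microwatts to milliwatts
    return energy_mwh / power_mw / HOURS_PER_DAY


if __name__ == "__main__":
    # Assumed nominal coin cell: ~225 mAh at 3 V.
    for avg_uw in (100, 1_000, 10_000):
        print(f"{avg_uw:>6} uW average -> {battery_life_days(225, 3.0, avg_uw):7.1f} days")
```

Under those assumptions, a 100 µW average draw gives roughly 281 days, 1 mW about 28 days, and 10 mW under 3 days. This is why average power during continuous inference matters far more than a peak TOPS figure.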

And that’s the gap Ambient Scientific is filling: making AI that doesn’t live in the cloud or drain a battery. AI that’s built where the data is, not where the marketing slides are.

Want to Build AI That Actually Works Where It Runs?

If you're building edge AI wearables, diagnostics, audio devices, industrial sensors, or smart implants, we should talk.

Our chips and SDK are made for exactly this kind of product.

Contact us and take the first step toward real, not theoretical, performance.
